Content from Virtual Environments

Last updated on 2025-09-18

Overview

Questions

- What is a Virtual Environment? / Why use a Virtual Environment?

- How do I create a Virtual Environment?

Objectives

- Create a new virtual environment using uv

- Push our new project to a GitHub repository.

What is a Virtual Environment?

A virtual environment is an isolated workspace where you can install python packages and run python code without worrying about affecting the tools, executables, and packages installed in either the global python environment or in other projects.

What is the difference between a “package manager” and a “virtual environment”?

A package manager helps automate the process of installing, upgrading, and removing software packages. Each package is usually built on top of several other packages, and relies on the methods and objects they provide. However, as packages are upgraded and changed over time, the available methods and objects can change. A package manager solves the complex “dependency web” created by the packages you would like to install and finds versions of all required packages that are compatible with one another.

Why Would I use a Virtual Environment?

If you are only ever working on your own projects, or on scripts for a single project, it’s absolutely fine to never worry about virtual environments. But as soon as you start creating new projects or working on code written by other people, it becomes incredibly important to know that the code you are running uses the exact same versions of libraries.

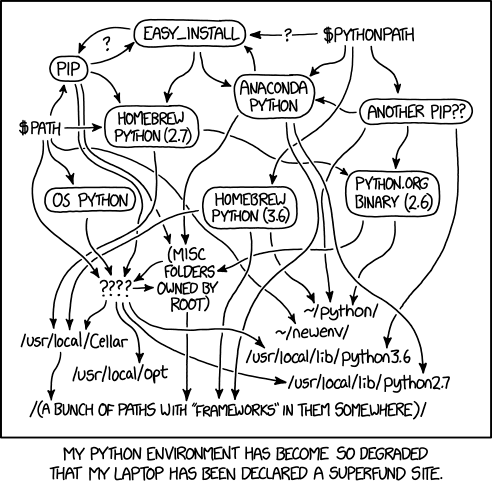

In the past, it was notoriously difficult to manage environments with python.

There have been a number of attempts to create a “one size fits all” approach to virtual environments and dependency management:

- venv

- virtualenv

- conda

- pipenv

- pyenv

- poetry

We’re going to use uv for the purposes of this workshop. uv is another tool that promises to meet the needs of environment and dependency management; however, there are a few key elements that set it apart:

- It is written in Rust, which gives it a significant speed improvement over pip and conda.

- It works with the pyproject.toml and uv.lock files, which allow for human and computer readable project files.

- It can install and manage its own python versions.

- It works as a drop-in replacement for pip, eliminating the need to learn new commands.

Creating a project with UV

Before the workshop, you should have had a chance to install and check that your python and uv executables were working. If you have not yet had a chance to do this, please refer to the setup page for this workshop.

We’re going to start with a totally blank project, so let’s create a directory called “textanalysis-tool”. Navigate to this directory in your command line. Let’s quickly make sure we have uv installed and working by typing uv --version. You should see uv print its version number (the exact version might be different).

We can start a new project with uv by running the command uv init. This will automatically create a few files for us:

- .python-version: This file is used to optionally specify the Python version for the project.

- main.py: This is the main Python script for the project.

- pyproject.toml: This file is used to manage project dependencies and settings.

- README.md: This file contains human written information about the project.

- .gitignore: (Depending on your version of uv) This file specifies files and directories that should be ignored by git.

If we take a look at the pyproject.toml file, we can see that it contains some basic information about our project in a fairly readable format:

TOML

[project]
name = "textanalysis-tool-{my-name}"
version = "0.1.0"
description = "Add your description here"
readme = "README.md"
requires-python = ">=3.13"
dependencies = []

The requires-python field may vary depending on the exact version of python you’re working with.

Make sure to change {my-name} in the name field to something unique, such as your GitHub username. This is important later when we upload our package to TestPyPI, as package names must be unique.

Creating a Virtual Environment

To create a virtual environment with uv, we can use the uv venv command. This will create a new virtual environment in a directory called .venv within our project folder.

Before we activate our environment, let’s quickly check the location of the python executable we are currently using by starting a python interpreter and running import sys followed by sys.executable.

Depending on your operating system, you may need to type python3 instead of python to start the interpreter.

You can type exit() to leave the python interpreter.

You should see the path to the location of the python executable on your machine. Now let’s activate our environment. The exact command will depend on your operating system, but the output of the uv venv command should show you the correct command.

If this command works properly, you should see some text in parentheses before your prompt:

(textanalysis-tool) D:\Documents\Projects\textanalysis-tool>

Let’s start up the python interpreter again and check the location of our executable. What you should now see is that the executable is located in the .venv/Scripts directory of our project (on macOS and Linux it will be .venv/bin instead):

(textanalysis-tool) D:\Documents\Projects\textanalysis-tool>python
Python 3.13.7 (tags/v3.13.7:bcee1c3, Aug 14 2025, 14:15:11) [MSC v.1944 64 bit (AMD64)] on win32
Type "help", "copyright", "credits" or "license" for more information.
>>> import sys
>>> sys.executable
'D:\\Documents\\Projects\\textanalysis-tool\\.venv\\Scripts\\python.exe'

Exit out of the interpreter and deactivate the virtual environment with deactivate.
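If you would rather check from code than read the path by eye, the following sketch compares sys.prefix with sys.base_prefix. The helper name in_virtual_environment is made up for this illustration; the two sys attributes are standard.

```python
import sys


def in_virtual_environment() -> bool:
    """Return True when the running interpreter belongs to a virtual environment."""
    # Inside a venv, sys.prefix points at the environment directory, while
    # sys.base_prefix still points at the Python installation it was created from.
    return sys.prefix != sys.base_prefix


print("Interpreter:", sys.executable)
print("In a virtual environment:", in_virtual_environment())
```

Running this before and after activating the environment should flip the second line from False to True.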

Git Commit and Pushing to our Repository

Some versions of uv will automatically create a .gitignore file when you run uv init. If you don’t see one in your project folder, you can create one manually.

We want a .gitignore file to control which files are added to our git repository. It’s generally a good idea to create this file early on, and update it whenever you notice files or folders you want to explicitly prevent from being added to the repository.

We can create a gitignore from the command line with type nul > .gitignore (Windows), or touch .gitignore (Mac/Linux). There are several pre-written gitignores that we can optionally use, but for this project we’ll maintain our own. Open up the file and add the following lines to it:

__pycache__/
dist/
*.egg-info/
scratch/

A commonly used gitignore is the Python.gitignore maintained by GitHub in its github/gitignore repository.

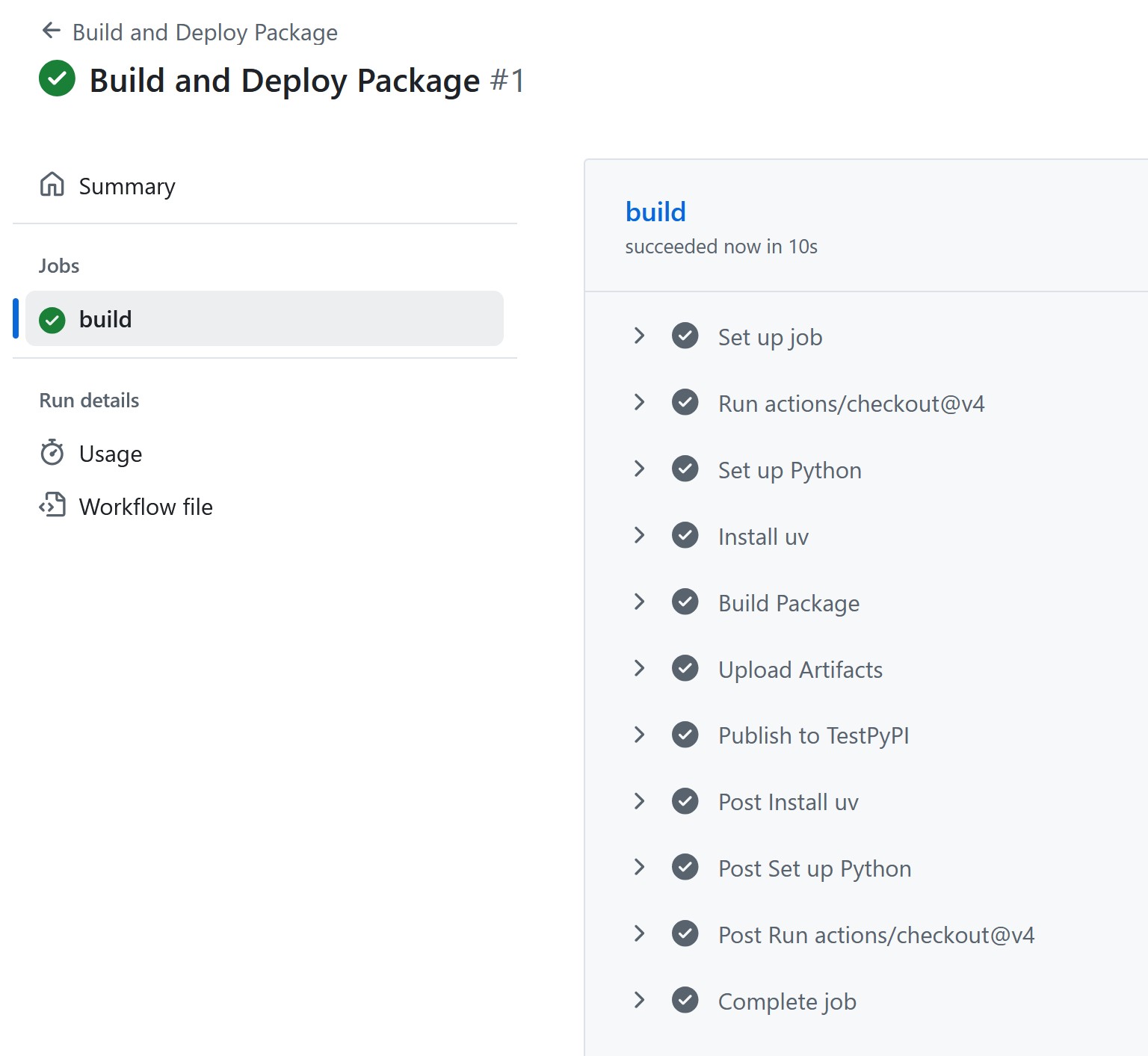

Next, let’s set up a repository on GitHub to store our code. We’ll make an entirely blank repository, with the same name as our project: “textanalysis-tool”.

We’re creating the files on our local machine first, then the remote repository. There’s no reason you can’t go the other way around, creating the remote repository then cloning it to your local machine.

First, we’ll initialize a git repository locally, making an initial commit with the files that uv generated:

git init
git add .gitignore .python-version README.md main.py pyproject.toml
git commit -m "Initial commit"

Then we’ll follow the directions for creating a new repository:

git remote add origin https://github.com/{username}/textanalysis-tool.git
git branch -M main
git push -u origin main

We are using https for our remote URL, but you can also use ssh if you have that set up.

If all goes well, we’ll see our code appear in the new repository.

And with that, we’re ready to start writing our tool!

Challenge 1: Adding a Package Dependency

Now that we have our project set up, let’s add a project dependency. Later on in the workshop, we’ll be parsing HTML documents, so let’s add the beautifulsoup4 package to our project.

Try the following command:

uv add beautifulsoup4

Take a look at the pyproject.toml and uv.lock files. What changed? What is the purpose of each file?

What is the difference between the command uv add beautifulsoup4 and uv pip install beautifulsoup4?

The pyproject.toml file is a human readable file that contains the list of packages that our project depends on. The uv.lock file is a machine readable file that contains the exact versions of all packages that were installed, including any dependencies of the packages we explicitly installed.

uv add will add the package to the pyproject.toml file, update the uv.lock file, and install the package into our virtual environment. uv pip install will install the package into our virtual environment, but will not add it to the pyproject.toml file.

- Setting up a virtual environment is useful for managing project dependencies.

- Using uv simplifies the process of creating and managing virtual environments.

- There are several options other than uv for managing virtual environments, such as venv and conda.

- It’s important to version control your project from the start, including a .gitignore file.

Content from Creating A Module

Last updated on 2025-09-28

Overview

Questions

- How do I create a Python module?

- How do I import a local module into my code?

Objectives

- Create a Python module with multiple files

- Import functions from a local module into a script

Project Organization

In order to keep our project organized, we’ll start by creating some directories to put our code in. So that we can keep the “source” code of our project separate from other aspects, we’ll start by creating a directory called “src”. In this directory, we’ll create a second directory with the name of our module, in this case “textanalysis_tool”. We can also delete the “main.py” file that was generated automatically by uv. Your project folder should now look like this:

textanalysis-tool/
├── src/
│   └── textanalysis_tool/
├── .gitignore
├── .python-version
├── pyproject.toml
└── README.md

Note that the interior folder has an underscore instead of a hyphen. We will import the module using the name of the interior folder, textanalysis_tool. This is important as hyphens are not valid characters in Python module names.

Next, we’ll create a file called __init__.py in the src/textanalysis_tool directory. This file will make Python treat the directory as a package. Next to the __init__.py file, we can create other Python files that will contain the code for our module.

The __init__.py file is a special filename in python that indicates that the directory should be treated as a package. Often these files are simply blank; however, we can also include some additional code to initialize the package or set up any necessary imports, as we will see later.

Let’s create a code file now, called say_hello.py and

put a simple function in it:
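A minimal version of say_hello.py might look like the sketch below. It is written to be consistent with the test script used in this episode, which expects hello("My Name") to return "Hello, My Name!"; the type hints and docstring are additions for readability.

```python
def hello(name: str) -> str:
    """Return a greeting for the given name."""
    return f"Hello, {name}!"
```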

Our project folder should now look like this:

textanalysis-tool/
├── src/
│   └── textanalysis_tool/
│       ├── __init__.py
│       └── say_hello.py
├── .gitignore
├── .python-version
├── pyproject.toml
└── README.md

Previewing Our Module

It’s all well and good to write some code in here, but how can we actually use it? Let’s create a python script to test our module.

Let’s create a directory called “tests”, and start a new file called test_say_hello.py in it.

Add the following code to it:

PYTHON

from textanalysis_tool.say_hello import hello

result = hello("My Name")

if result == "Hello, My Name!":
    print("Test passed!")
else:
    print("Test failed!")

One of the really nice things about using uv is that we can replace python in our commands with uv run and it will use the environment we have created for the project to run the code. At the moment, this doesn’t make a difference, but once we start adding dependencies to our project we’ll see how useful this is.

Let’s run the script from our command line. If you’re in the root directory of the project, your command will look something like uv run tests/test_say_hello.py.

Aaaand… It doesn’t work!

PYTHON

D:\Documents\Projects\textanalysis-tool>uv run tests/test_say_hello.py
Traceback (most recent call last):
  File "D:\Documents\Projects\textanalysis-tool\tests\test_say_hello.py", line 1, in <module>
    from textanalysis_tool.say_hello import hello
ModuleNotFoundError: No module named 'textanalysis_tool'

The reason for this is that we never actually told python where it can find our code!

The Python PATH

When you run a command like import pandas, what python actually does is search a series of directories, in order, looking for a module or package named pandas. We can see which directories will be checked by printing the sys.path variable.
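For example, this short snippet lists the search directories in the order python will try them. The exact entries depend on your machine and on whether a virtual environment is active.

```python
import sys

# Print every directory python will search for imports, in priority order.
for position, directory in enumerate(sys.path):
    print(position, directory)
```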

We are only interested in checking our current code, not in installing it as a package. However, because we have the __init__.py file in our package directory, if we add the absolute or relative path of the directory that contains our package (here, src) to our sys.path variable, python will look there for modules as well.

Let’s add a quick line to the top of our testing script to add our specific module directory to the path:

PYTHON

import sys

sys.path.insert(0, "./src")

from textanalysis_tool.say_hello import hello

result = hello("My Name")

if result == "Hello, My Name!":
    print("Test passed!")
else:
    print("Test failed!")

This time it works! And if we modify the hello function to print out something slightly different, we can see that running the script changes the output right away! Note that "./src" is resolved relative to the directory you run the command from, so run the script from the root directory of the project.

We used sys.path.insert instead of sys.path.append because we want to give our module directory the highest priority when searching for modules. This way, if there are any naming conflicts with other installed packages, our local module will be found first.

Ideally you would never be working with a package name that has a conflict elsewhere in the path directories, but just in case, this avoids some potential issues.
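The difference in priority can be demonstrated with two throwaway directories that each contain a module of the same name. Everything here is made up for the demonstration: priority_demo is a hypothetical module name, and the directories are temporary.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Two throwaway directories, each holding a module with the same (made-up) name.
appended_dir = Path(tempfile.mkdtemp())
inserted_dir = Path(tempfile.mkdtemp())
(appended_dir / "priority_demo.py").write_text('SOURCE = "appended"\n')
(inserted_dir / "priority_demo.py").write_text('SOURCE = "inserted"\n')

sys.path.append(str(appended_dir))     # end of the list: searched last
sys.path.insert(0, str(inserted_dir))  # start of the list: searched first

importlib.invalidate_caches()
priority_demo = importlib.import_module("priority_demo")

# The copy in the directory placed at the front of sys.path is the one found.
print(priority_demo.SOURCE)
```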

Dot Notation in Imports

You probably noticed that our import statement mimics the file and directory structure of the project. We have the package directory (textanalysis_tool), then the filename (say_hello), and finally the function name (hello). Python treats all of these similarly when trying to locate a function or module. We can also modify our import to make the code a little neater:

PYTHON

import sys

sys.path.insert(0, "./src")

from textanalysis_tool import say_hello

result = say_hello.hello("My Name")

if result == "Hello, My Name!":
    print("Test passed!")
else:
    print("Test failed!")

or even better:

PYTHON

import sys

sys.path.insert(0, "./src")

from textanalysis_tool.say_hello import hello

result = hello("My Name")

if result == "Hello, My Name!":
    print("Test passed!")
else:
    print("Test failed!")

For simplicity here, we are using the from ... import ... syntax to import only the function we need from the module. This way we don’t have to include the module name every time we call the function.

This is a common practice in python, however the absolute best practice would be to import the entire module and then call the function with the module name, as in the first example. This way we avoid potential naming conflicts with functions from other modules, as well as providing clarity about where the function is coming from when reading the code.

The __init__.py File

We can see that in order to import our function, we have to include

both the name of the module and the name of the file before specifying

the name of the function. Sometimes this can get tedious, especially if

there is a directory in our project with lots of files and different

functions in each file. This is where we can simplify things a little by

adding a tiny bit of code to our __init__.py file:

We can run our testing script just the same way as before and it will

still work, but we can also now leave out the .say_hello

part:
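As a sketch of the idea, the snippet below builds a throwaway package whose __init__.py re-exports hello with a single relative import, then imports the function straight from the package. The name demo_package is a stand-in for textanalysis_tool so that the example can run anywhere; in the real project the only line you would add to src/textanalysis_tool/__init__.py is the from .say_hello import hello line.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Build a throwaway package; "demo_package" stands in for textanalysis_tool.
root = Path(tempfile.mkdtemp())
package_dir = root / "demo_package"
package_dir.mkdir()
(package_dir / "say_hello.py").write_text(
    'def hello(name):\n    return f"Hello, {name}!"\n'
)
# The one line that goes in __init__.py: re-export hello at package level.
(package_dir / "__init__.py").write_text("from .say_hello import hello\n")

sys.path.insert(0, str(root))
importlib.invalidate_caches()

from demo_package import hello  # no ".say_hello" needed any more

print(hello("My Name"))
```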

Git Add / Commit

Before we forget, now that we have some simple code up and running, let’s add it to our git repository.

Challenge 1: Add a Sub Module

Create a directory under src/textanalysis_tool called greetings and add a file called greet.py with a function called greet that prints out the following:

Hello {User}!
It's nice to meet you!

How do you call this function from your testing script?

You could also include this line in our existing

__init__.py file:

or add another __init__.py and include the following

line:

Challenge 2: Inter-Module imports

Building on the last challenge, the first line of our greeting is

identical to the output from our hello function. How can we

avoid code duplication by calling the hello function from

within our greet function?

Why are you bothering to write out the entire module path in greet.py? Can’t you just do this:

Yes, you absolutely can! The dot notation used in python imports can use .. to refer to the parent package, or even ... to refer to a grandparent package.

The reason we’re not doing this here is for clarity: PEP 8 recommends absolute imports.
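For reference, here is what the relative form looks like in a runnable sketch. It builds a temporary package laid out like the one in this challenge; demo_relative is a stand-in name for textanalysis_tool, and the body of greet is one possible implementation, not the official solution.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Source for greetings/greet.py. The ".." climbs from the greetings
# sub-package up to the top-level package that contains say_hello.py.
GREET_SOURCE = '''\
from ..say_hello import hello


def greet(name):
    print(hello(name))
    print("It's nice to meet you!")
'''

# "demo_relative" stands in for textanalysis_tool.
root = Path(tempfile.mkdtemp())
package_dir = root / "demo_relative"
greetings_dir = package_dir / "greetings"
greetings_dir.mkdir(parents=True)

(package_dir / "__init__.py").write_text("")
(package_dir / "say_hello.py").write_text(
    'def hello(name):\n    return f"Hello, {name}!"\n'
)
(greetings_dir / "__init__.py").write_text("")
(greetings_dir / "greet.py").write_text(GREET_SOURCE)

sys.path.insert(0, str(root))
importlib.invalidate_caches()

from demo_relative.greetings.greet import greet

greet("My Name")
```

Relative imports like this only work inside a package; running greet.py directly as a script would fail because python would not know what ".." refers to.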

- Python modules are simply directories with an __init__.py file in them

- You can add the path to your module directory to sys.path to make it available for import

- You can use dot notation in your imports to specify the module, file, and function you want to use

Content from Class Objects

Last updated on 2025-09-28

Overview

Questions

- What is a class object?

- How can I define a class object in Python?

- How can I use a class object in my module?

Objectives

- Create a Class object in our module.

- Demonstrate how to use our Class object in a sample script.

What is a Class Object?

You can think of a class object as a kind of “blueprint” for an object. It defines what properties the object can have, and what methods it can perform. Once a class is defined, you can create any number of objects based on that class, each of which is referred to as an “instance” of that class.

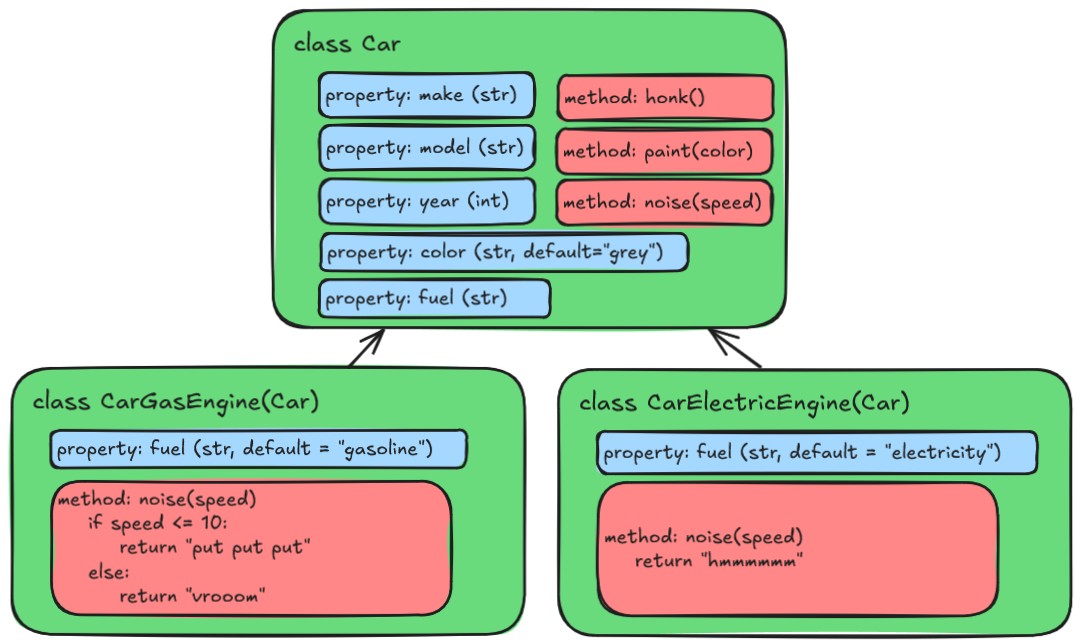

As an example, let’s imagine a Car. A Car has many properties and can do many things, but for our purposes, let’s limit them slightly. Our Car will have a make, model, year, and color, and it will be able to honk, and be painted.

The make, model, year, and color are all “properties” of the car. Honking a horn and being painted are both “methods” of the car. Here’s a diagram of our car object:

In python we can define a class object like this:

PYTHON

class Car:
    def __init__(self, make: str, model: str, year: int, color: str = "grey", fuel: str = "gasoline"):
        self.make = make
        self.model = model
        self.year = year
        self.color = color
        self.fuel = fuel

    def honk(self) -> str:
        return "beep"

    def paint(self, new_color: str) -> None:
        self.color = new_color

    def noise(self, speed: int) -> str:
        if speed <= 10:
            return "putt putt"
        else:
            return "vrooom"

The convention in python is that all classes should be named in CamelCase, with no underscores. There are no limits enforced by the interpreter but it is good practice to follow the standards of the python community.

Some of this might look familiar if you think about how we define functions in Python. There’s a def keyword, followed by the function name and parentheses. Inside the parentheses, we can define parameters, and these parameters can contain default values. We can also include type hints, for both parameters and return values. However all of this is indented one level, underneath the class keyword, which is followed by our class name.

Note that this is just our blueprint - it doesn’t refer to any specific car, just the general idea of a car. Also note the __init__ method. This is a special method which is called whenever you “instantiate” a new object. The parameters for this method are supplied when we first create an object, and they behave like the parameters of any function: if no default value is provided, the parameter is required in order to create the object, and if a default value is provided, it is optional.

An instance of a car, in this case called “my_car” might look something like this:
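The sketch below repeats a shortened version of the Car class so that it runs on its own, then creates an instance. The make, model, and year values are arbitrary examples chosen for illustration.

```python
class Car:
    def __init__(self, make: str, model: str, year: int, color: str = "grey", fuel: str = "gasoline"):
        self.make = make
        self.model = model
        self.year = year
        self.color = color
        self.fuel = fuel

    def honk(self) -> str:
        return "beep"

    def paint(self, new_color: str) -> None:
        self.color = new_color


# make, model, and year are required; color and fuel fall back to their defaults.
my_car = Car("Toyota", "Corolla", 2005)

print(my_car.color)   # the default color set in __init__
print(my_car.honk())

my_car.paint("red")   # changes this one instance only
print(my_car.color)
```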

What exactly is “an instance”?

An instance is how we refer to a specific object that has been created from a class. The class is the “blueprint”, while the instance is the actual object that is created based on that blueprint.

In our example, my_car is an instance of the Car class. It has its own specific values for the properties defined in the class (make, model, year, color), and it can use the methods defined in the class (honk, paint).

Also note that each of the methods within the class definition starts with a “self” argument. This is a reference to the current instance of the class, and is used to access variables that belong to that instance. In our example, we store the make, model, year and color as properties of the instance. When we call the paint method, we use self.color to refer to the current instance’s color property.

The __init__ method is called a “dunder” (double underscore) method in python. There are a number of other dunder methods that we can define, that will interact with various built-in functions and operators. For example, we can define a __str__ method, that will allow us to specify how our object should be represented as a string when we call str() on it. Likewise, we can define __eq__, which would tell python how to behave when we compare two objects for equality.
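As an illustration, here is a trimmed-down Car with both of these dunder methods. The string format and the choice to compare make, model, and year are assumptions made for this sketch, not part of the lesson’s Car class.

```python
class Car:
    def __init__(self, make: str, model: str, year: int):
        self.make = make
        self.model = model
        self.year = year

    def __str__(self) -> str:
        # Used by str(car) and print(car).
        return f"{self.year} {self.make} {self.model}"

    def __eq__(self, other: object) -> bool:
        # Used by car_a == car_b.
        if not isinstance(other, Car):
            return NotImplemented
        return (self.make, self.model, self.year) == (other.make, other.model, other.year)


first = Car("Toyota", "Corolla", 2005)
second = Car("Toyota", "Corolla", 2005)

print(str(first))
print(first == second)  # equal according to __eq__
print(first is second)  # but still two separate instances
```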

A Class object for Our project

Let’s create a class object for our text analysis project. We’re going to be downloading some books from Project Gutenberg. To make things easy to begin with, we’ll limit ourselves to just the .txt files.

Since we’re going to create some useful objects and methods for

working with documents, let’s define a Document class.

Take a look at an example txt document from Project Gutenberg: Meditations, by Marcus Aurelius

What properties and methods might we want to include in our Document class?

It looks like there’s a standard metadata section in these documents, with a Title, Author, Release Date, Language, and Credits. Those will probably be useful metadata. Looking at the url for this file, it also looks like there’s an ID on Project Gutenberg.

For methods, we’ll need to be able to read the document from a file. And for some simple methods, let’s count the number of lines in a document, and another method to get the number of times a particular word appears.

Let’s start writing our class object in a new file:

src/textanalysis_tool/document.py:

PYTHON

class Document:
    def __init__(self, filepath: str, title: str, author: str = "", id: int = 0):
        self.filepath = filepath
        self.title = title
        self.author = author
        self.id = id
        self.content = self.read(self.filepath)

    def read(self, filepath: str) -> str:
        with open(filepath, 'r', encoding='utf-8') as file:
            return file.read()

    def get_line_count(self) -> int:
        return len(self.content.splitlines())

    def get_word_occurrence(self, word: str) -> int:
        return self.content.lower().count(word.lower())

Our class object Document is a “blueprint” that bundles some data together with a collection of methods. When we create an instance of it, we can provide a filepath, a title, an author, and an id. Only the filepath and the title are required, while the author and id are optional.

The __init__ method is called as soon as the object is created, and we can see that in addition to storing the parameters to their self counterparts, there is an additional property called self.content. This property is used to store the entire text content of the document. We obtain this by calling the self.read method, which reads the content from the specified file.

Principle of Least Astonishment (or, We’re All Adults Here)

Unlike some other programming languages, python doesn’t have the concept of “private” or “internal” variables and methods. Instead there is a convention which says that any variable or method that is intended for internal use should be prefixed with an underscore (e.g. _content). This is however just a convention - there is nothing stopping you from accessing these variables and methods from outside the class if you really want to.

There are also two methods that we’ve defined - get_line_count and get_word_occurrence. Neither of these will be called directly on the class itself, but rather on instances of the class that we create (as indicated by the use of self within the class methods). Note that these methods make use of the self.content property - this is a variable that is not defined within the method, so you may expect it to be out of scope. However, self refers to the specific instance the method was called on, and so the method has access to all of that instance’s properties and methods, including the self.content property.

Trying out Our Class Object

Let’s try out our new class object. Create a file in our “tests” directory called example_file.txt and add some text to it:

This is a test document. It contains words.
It is only a test document.

Next, let’s create another test file. Our last one was called test_say_hello.py, so let’s call this test_document.py:

PYTHON

import sys

sys.path.insert(0, "./src")

from textanalysis_tool.document import Document

total_tests = 3
passed_tests = 0
failed_tests = 0

# Check that we can create a Document object
doc = Document(filepath="tests/example_file.txt", title="Test Document")
if doc.title == "Test Document" and doc.filepath == "tests/example_file.txt":
    passed_tests += 1
else:
    failed_tests += 1

# Test the methods
if doc.get_line_count() == 2:
    passed_tests += 1
else:
    failed_tests += 1

if doc.get_word_occurrence("test") == 2:
    passed_tests += 1
else:
    failed_tests += 1

print(f"Total tests: {total_tests}")
print(f"Passed tests: {passed_tests}")
print(f"Failed tests: {failed_tests}")

Now we’ll run this file using our uv environment with uv run tests/test_document.py.

You should see the output:

Total tests: 3
Passed tests: 3
Failed tests: 0

Challenge 1: Identify the mistake

The following code is supposed to define a Bird class that inherits from the Animal class and overrides the whoami method to provide a specialized message. However, there is a mistake in the code that prevents it from working as intended. Can you identify and fix the mistake?

When we try to run the code we get the following error:

---------------------------------------------------------------------------
TypeError                                 Traceback (most recent call last)
Cell In[7], line 14
     11         return f"I am a bird. My name is irrelevant."
     13 animal = Bird("boo")
---> 14 animal.whoami()

TypeError: Bird.whoami() takes 0 positional arguments but 1 was given

We have forgotten to include the self parameter in the whoami method of the Bird class. The self parameter is required for instance methods in Python, as it refers to the instance of the class. Without it, the method cannot access instance properties or methods.
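A corrected version might look like the sketch below. Only the Bird return string, the Bird("boo") call, and the whoami call come from the traceback above; the body of the Animal class is an assumption filled in so that the example runs on its own.

```python
class Animal:
    # Assumed base class: the exercise's actual Animal may differ.
    def __init__(self, name: str):
        self.name = name

    def whoami(self) -> str:
        return f"I am an animal. My name is {self.name}."


class Bird(Animal):
    # Fixed: whoami now accepts self, which python passes automatically
    # whenever the method is called on an instance.
    def whoami(self) -> str:
        return "I am a bird. My name is irrelevant."


animal = Bird("boo")
print(animal.whoami())
```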

Challenge 2: The __str__ Method

In our examples so far, we define an __init__ method for our class objects. This is a special kind of method called a “dunder” (double underscore) method. There are a number of other dunder methods that we can define, that will interact with various built-in functions and operators.

Try to define a __str__ method for the following class so that it creates the following output when run:

PYTHON

class Animal:
    def __init__(self, name: str):
        print(f"Creating an animal named {name}")
        self.name = name

    # Your __str__ method here

animal = Animal("Moose")
print(str(animal))

output:

Creating an animal named Moose
I am an animal named Moose.

Challenge 3: Static Methods

In addition to instance methods, which operate on an instance of a

class (and so have self as the first parameter), we can

also define static methods. These are methods that don’t operate on an

instance of the class, and so don’t have self as the first

parameter. Instead, they are defined using the

@staticmethod decorator.

Create a static method called is_animal that takes a

single parameter, obj, and returns True if

obj is an instance of the Animal class, and

False otherwise.

A decorator is a special kind of function that modifies the behavior of another function. Decorators are applied using the @ symbol, followed by the name of the decorator function. In this case, we use the @staticmethod decorator to indicate that the following method is a static method, so this line must be placed directly above the method definition.

You can use the python built-in function isinstance to

check if an object is an instance of a class. (https://docs.python.org/3/library/functions.html#isinstance)
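Putting the two hints together, one possible shape for the static method is sketched here. The Animal class is reduced to the minimum needed for the example to run.

```python
class Animal:
    def __init__(self, name: str):
        self.name = name

    @staticmethod
    def is_animal(obj: object) -> bool:
        # No self parameter: the method only looks at the argument it is given.
        return isinstance(obj, Animal)


# A static method can be called on the class itself, without an instance.
print(Animal.is_animal(Animal("Moose")))
print(Animal.is_animal("Moose"))
```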

Challenge 4: Testing Out Our Class on Real Data

Let’s download a real text file from Project Gutenberg and see how our class object handles it. You can pick any file you like, or you can use the same one we looked at earlier: Meditations, by Marcus Aurelius.

Modify your test_document.py file to create a new

Document object using the real text file, and then test out the

get_line_count and get_word_occurrence methods

on it. What do you get? What issues might there be when we start using

this class on our actual data?

How can we improve our class to handle these issues?

The metadata at the start of the document always ends with a line that says *** START OF THE PROJECT GUTENBERG EBOOK {the title of the book} ***, and the end of the book’s content is marked by the line *** END OF THE PROJECT GUTENBERG EBOOK {the title of the book} ***.

We need some way to extract all of the content between these two markers…

There is a python module called re that allows us to

work with regular expressions. This can be used to match specific

patterns in text, or for extracting specific parts of a string. You can

use the following regex pattern to match the content between the start

and end markers:

The Project Gutenberg text files have a lot of metadata at the start

and end of the file, which will affect the line count and word

occurrence counts. We might want to modify our self.read

method to strip out this metadata before storing the content in

self.content.

One possible solution would look like this:

PYTHON

import re

class Document:
    CONTENT_PATTERN = r"\*\*\* START OF THE PROJECT GUTENBERG EBOOK .*? \*\*\*(.*?)\*\*\* END OF THE PROJECT GUTENBERG EBOOK .*? \*\*\*"

    def __init__(self, filepath: str, title: str = "", author: str = "", id: int = 0):
        self.filepath = filepath
        self.content = self.get_content(filepath)
        self.title = title
        self.author = author
        self.id = id

    def get_content(self, filepath: str) -> str:
        raw_text = self.read(filepath)
        match = re.search(self.CONTENT_PATTERN, raw_text, re.DOTALL)
        if match:
            return match.group(1).strip()
        raise ValueError(f"File {filepath} is not a valid Project Gutenberg Text file.")

    def read(self, file_path: str) -> str:
        with open(file_path, "r", encoding="utf-8") as file:
            return file.read()

    def get_line_count(self) -> int:
        return len(self.content.splitlines())

    def get_word_occurrence(self, word: str) -> int:
        return self.content.lower().count(word.lower())

Note that our test file now fails, because the CONTENT_PATTERN doesn’t match anything in our example_file.txt. We could modify our example file to mimic the layout of a real Project Gutenberg text file:

*** START OF THE PROJECT GUTENBERG EBOOK TEST ***
This is a test document. It contains words.
It is only a test document.
*** END OF THE PROJECT GUTENBERG EBOOK TEST ***

Or we could modify our class to allow for a different content pattern to be specified when creating the object.

Neat! We’ve successfully created and used a class object in our

module. But certainly there’s a better way to test this, right? In the

next episode, we’ll look at how to write proper unit tests for our class

object with pytest.

- Python classes are defined using the class keyword, followed by the class name and a colon.

- The __init__ method is a special method that is called when an instance of the class is created.

- Instance methods are defined like normal functions, but they must include self as the first parameter.

Content from Unit Testing

Last updated on 2025-09-28

Overview

Questions

- What is unit testing?

- Why is unit testing important?

- How do you write a unit test in Python?

Objectives

- Explain the concept of unit testing

- Demonstrate how to write and run unit tests in Python using

pytest

Unit Testing

We have our two little test files, but you might imagine that it’s not particularly efficient to always write individual scripts to test our code. What if we had a lot of functions or classes? Or a lot of different ideas to test? What if our objects changed down the line? Our existing modules are going to be difficult to maintain, and, as you may have expected, there is already a solution for this problem.

What Makes For a Good Test?

A good test is one that is:

- Isolated: A good test should be able to run independently of other tests, and should not rely on external resources such as databases or web services.

- Repeatable: A good test should produce the same results every time it is run.

- Fast: A good test should run quickly, so that it can be run frequently during development.

- Clear: A good test should be easy to understand, so that other developers can easily see what is being tested and why. Not just the output provided by the test, but also the variables, function names, and structure of the test should be clear and descriptive.

It can be easy to get carried away with testing everything and writing tests that cover every single case that you can come up with. However, a massive test suite that is cumbersome to update and takes a long time to run is not useful. A good rule of thumb is to focus on testing the most important parts of your code, and the parts that are most likely to break. This often means focusing on edge cases and error handling, rather than trying to test every possible input.

Generally, a good test module will test the basic functionality of an object or function, as well as a few edge cases.

What are some edge cases that you can think of for the

hello function we wrote earlier?

What about the Document class?

Some edge cases for the hello function could

include:

- Passing in an empty string

- Passing in a very long string

- Passing in something that is not a string (e.g. a number or a list)

Some edge cases for the Document class could

include:

- Passing in a file path that does not exist

- Passing in a file that is not a text file

- Passing in a file that is empty

- Passing in a word that does not exist in the document when testing

get_word_occurrence

pytest

pytest is a testing framework for Python that helps to

write simple and scalable test cases. It is widely used in the python

community, and has in-depth and comprehensive documentation. We won’t be

getting into all of the different things you can do with pytest, but we

will cover the basics here.

To start off with, we need to add pytest to our environment. However, unlike our previous packages, pytest is not required for our module to work; it is only used by us as we are writing code. Therefore it is a “development dependency”. We can still add this to our pyproject.toml file via uv, but we need to add a special flag to our command so that it goes in the correct place.

If you open up your pyproject.toml file, you should see that pytest has been added under a new section called “dependency groups” (your version number may be different):

Now we can start creating our tests.

Writing a pytest Test File

Part of pytest is the concept of “test discovery”. This

means that pytest will automatically find any test files that follow a

certain naming convention. By default, pytest will look for files that

start with test_ or end with _test.py. Inside

these files, pytest will look for functions that start with

test_.

Now, our files already have the correct names, so we just need to

change the contents. Let’s start with test_say_hello.py.

Open it up and replace the contents with the following:

PYTHON

from textanalysis_tool.say_hello import hello


def test_hello():
    assert hello("My Name") == "Hello, My Name!"

In our previous test file, we had to add the path to our module each time. Now that we are using pytest, we can use a special file called conftest.py to add this path automatically.

Create a file called conftest.py in the tests

directory and add the following code to it:
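A conftest.py along these lines is one possibility. This is a sketch under assumptions: it presumes the package lives in a src directory next to tests, and the helper name add_src_to_path is hypothetical. In a real conftest.py you would call the helper with __file__; a made-up path is used here so the snippet runs on its own.

```python
import sys
from pathlib import Path


def add_src_to_path(conftest_file: str) -> str:
    """Put the project's src directory at the front of sys.path."""
    # tests/conftest.py -> tests -> project root -> src
    src_dir = Path(conftest_file).resolve().parent.parent / "src"
    if str(src_dir) not in sys.path:
        sys.path.insert(0, str(src_dir))
    return str(src_dir)


# In an actual conftest.py this would be: add_src_to_path(__file__)
src_dir = add_src_to_path("textanalysis-tool/tests/conftest.py")
print(src_dir)
```

pytest imports conftest.py before collecting the tests in that directory, so the path is in place by the time the test modules run their own imports.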

Now, we just need to run the tests. We can do this with the command uv run pytest.

Note that we are using uv run to run pytest; this ensures that pytest is run in the correct environment with all the dependencies we have installed.

You should see output similar to the following:

============================= test session starts ==============================

platform win32 -- Python 3.13.7, pytest-8.4.2, pluggy-1.6.0

rootdir: D:\Documents\Projects\textanalysis-tool

configfile: pyproject.toml

collected 1 item

tests\test_say_hello.py . [100%]

============================== 1 passed in 0.12s ===============================

Why didn’t it run the other test file? Because even though the file is named correctly, it doesn’t contain any functions that start with test_. Let’s fix that now.

Open up test_document.py and replace the contents with

the following:

PYTHON

from textanalysis_tool.document import Document


def test_create_document():
    Document.CONTENT_PATTERN = r"(.*)"
    doc = Document(filepath="tests/example_file.txt")
    assert doc.filepath == "tests/example_file.txt"


def test_document_word_count():
    Document.CONTENT_PATTERN = r"(.*)"
    doc = Document(filepath="tests/example_file.txt")
    assert doc.get_line_count() == 2


def test_document_word_occurrence():
    Document.CONTENT_PATTERN = r"(.*)"
    doc = Document(filepath="tests/example_file.txt")
    assert doc.get_word_occurrence("test") == 2

Our example file doesn’t exactly look like a Project Gutenberg text file, so we need to change the CONTENT_PATTERN to match everything. This is a class level variable, so we can change it on the class itself, rather than on the instance.

Let’s run our tests again:

You should see output similar to the following:

============================= test session starts ==============================

platform win32 -- Python 3.13.7, pytest-8.4.2, pluggy-1.6.0

rootdir: D:\Documents\Projects\textanalysis-tool

configfile: pyproject.toml

collected 4 items

tests\test_document.py ... [ 75%]

tests\test_say_hello.py . [100%]

============================== 4 passed in 0.15s ===============================

You can see that all of the tests have passed. There is a small green dot for each test that was run, and a summary at the end. Compare this to the test file we had before. We got rid of all of the if statements, and just use the assert statement to check if the output is what we expect.

Testing Edge Cases / Exceptions

Let’s add an edge case to our tests. Open up

test_say_hello.py and add a test case for an empty

string:

PYTHON

from textanalysis_tool.say_hello import hello


def test_hello():
    assert hello("My Name") == "Hello, My Name!"


def test_hello_empty_string():
    assert hello("") == "Hello, !"

Run the tests again:

We get passing tests, which is what we expect. But we are the ones in charge of the function. What if we decide that, when the user doesn’t provide a name, we want to raise an exception? Let’s change the test to say that if the user provides an empty string, we want to raise a ValueError:

PYTHON

import pytest

from textanalysis_tool.say_hello import hello


def test_hello():
    assert hello("My Name") == "Hello, My Name!"


def test_hello_empty_string():
    with pytest.raises(ValueError):
        hello("")

Run the tests again:

This time, we get a failing test, because the hello function DID NOT raise a ValueError. Let’s change the hello function to raise a ValueError if the name is an empty string:

PYTHON

def hello(name: str = "User"):
    if name == "":
        raise ValueError("Name cannot be empty")
    return f"Hello, {name}!"

Running the tests again, we can see that all the tests pass.

Fixtures

One of the great features of pytest is the ability to use fixtures.

Fixtures are a way to provide data, state or configurations to your

tests. For example, we have a line in each of our tests that creates a

new Document object. We can use a fixture to create this

object once, and then use it in each of our tests. That way, if we need

to change the way we create the object in the future, we only need to

change it in one place.

Let’s create a fixture for our Document object. Open up

test_document.py and add the following import at the

top:

Then, add the following code below the imports:

Now, we can use this fixture in our tests. Update the test functions

to accept a parameter called doc, and remove the line that

creates the Document object. The updated test file should

look like this:

PYTHON

import pytest

from textanalysis_tool.document import Document


@pytest.fixture
def doc():
    Document.CONTENT_PATTERN = r"(.*)"
    return Document(filepath="tests/example_file.txt")


def test_create_document(doc):
    assert doc.filepath == "tests/example_file.txt"


def test_document_word_count(doc):
    assert doc.get_line_count() == 2


def test_document_word_occurrence(doc):
    assert doc.get_word_occurrence("test") == 2

Our documents are validated by searching for a starting and an ending regex pattern, and our test file contains neither, so validation would fail. We could add the markers to our test file, or we can alter the search pattern for the test. CONTENT_PATTERN is a class level variable, so we need to modify it before the instance is created. Note that an assignment on the class stays in effect for the rest of the test session unless it is reset.

Let’s run our tests again. Nothing changed in the output, but our code is now cleaner and easier to maintain.

Monkey Patching

Another useful feature of pytest is monkey patching. Monkey patching is a way to modify or extend the behavior of a function or class during testing. This is useful when you want to test a function that depends on an external resource, such as a database, file system or web resource. Instead of actually accessing the external resource, you can use monkey patching to replace the function that accesses the resource with a mock function that returns a predefined value.

In our use case, we have a file called example_file.txt that we use to test our Document class. However, if we wanted to test the Document class with files that have different contents, we would need to create a whole array of different test files. Instead, we can use monkey patching to replace the open function, so that instead of actually opening a file, it returns a string that we define.

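Let’s monkey patch the open function in our test_document.py file. The monkeypatch fixture is built into pytest, so it does not need to be imported. What we do need is the mock_open helper from unittest.mock (a module in the Python standard library). Add the import from unittest.mock import mock_open at the top of the file.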

Then, we can create a new fixture that monkey patches the

open function. Add the following code below the

doc fixture:

PYTHON

@pytest.fixture(autouse=True)
def mock_file(monkeypatch):
    mock = mock_open(read_data="This is a test document. It contains words.\nIt is only a test document.")
    monkeypatch.setattr("builtins.open", mock)
    return mock

You’ll notice that we also added the parameter autouse=True to the fixture. This means that, within this test file, this specific fixture will be automatically applied to all tests, without needing to explicitly include it as a parameter in each test function.

Go ahead and delete the example_file.txt file, and run

the tests again. Your tests should still pass, even though the file

doesn’t exist anymore. This is because we are using monkey patching to

replace the open function with a mock function that returns

the string we defined.
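To see the mechanism outside of pytest, here is a small standalone sketch. It swaps in unittest.mock.patch as a context manager in place of the monkeypatch fixture (both replace builtins.open and restore it afterwards), and read_text is a hypothetical stand-in for Document.read.

```python
from unittest.mock import mock_open, patch


def read_text(path: str) -> str:
    # Hypothetical stand-in for Document.read
    with open(path, "r", encoding="utf-8") as file:
        return file.read()


fake_open = mock_open(read_data="This is a test document.\nIt is only a test document.")

# While the patch is active, every call to open() is routed to fake_open
with patch("builtins.open", fake_open):
    text = read_text("file_that_does_not_exist.txt")

print(text)

# The mock also records how it was called, which can be asserted on
fake_open.assert_called_once_with("file_that_does_not_exist.txt", "r", encoding="utf-8")
```

No file is touched at any point: the function under test receives the predefined string, and the recorded call shows which path it tried to open.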

Edge Cases Again / Test Driven Development

Let’s go back and think about other things that might go wrong with

our Document class. What if the user provides a file path

that doesn’t exist? What if the user provides a file that is not a text

file? Or a file that is empty of content? Rather than write these into

our class object, we can first write tests that will check for the

behavior we expect or want in these edge cases, see if they fail, and

then update our class object to make the tests pass. This is called

“Test Driven Development” (TDD), and is a common practice in software

development.

Let’s add a test for a file that is empty. In this case, we would

want the initialization of the object to fail with a

ValueError. However for this test, we can’t use our

fixtures from above, so we’ll have to code it into the test. Add the

following to test_document.py:

PYTHON

def test_empty_file(monkeypatch):
    # Mock an empty file
    mock = mock_open(read_data="")
    monkeypatch.setattr("builtins.open", mock)

    with pytest.raises(ValueError):
        Document(filepath="empty_file.txt")

Because we are monkeypatching the open function, we don’t actually need to have a file called empty_file.txt in our tests directory. The open function will be replaced with our mock function that returns an empty string. We are providing a file name here to be consistent with the Document class initialization, and we are using the name to act as additional information for later developers to clarify the intent of the test.

Run the tests again:

It fails, as we expect. Now, let’s update the Document

class to raise a ValueError if the file is empty. Open up

document.py and update the get_content method

to the following:

PYTHON

...
    def get_content(self, filepath: str) -> str:
        raw_text = self.read(filepath)

        if not raw_text:
            raise ValueError(f"File {filepath} contains no content.")

        match = re.search(self.CONTENT_PATTERN, raw_text, re.DOTALL)
        if match:
            return match.group(1).strip()
        raise ValueError(f"File {filepath} is not a valid Project Gutenberg Text file.")
...

Challenge 1: Write a simple test

Create a file called text_utilities.py in the src/textanalysis_tool directory. In this file, paste the following function:

PYTHON

def create_acronym(phrase: str) -> str:
    """Create an acronym from a phrase.

    Args:
        phrase (str): The phrase to create an acronym from.

    Returns:
        str: The acronym.
    """
    if not isinstance(phrase, str):
        raise TypeError("Phrase must be a string.")

    words = phrase.split()
    if len(words) == 0:
        raise ValueError("Phrase must contain at least one word.")

    articles = {"a", "an", "the", "and", "but", "or", "nor", "on", "at", "to", "by", "in"}
    acronym = ""
    for word in words:
        if word.lower() not in articles:
            acronym += word[0].upper()

    return acronym

Create the following test cases for this function:

- A test that checks if the acronym for “As Soon As Possible” is “ASAP” and that the acronym for “For Your Information” is “FYI”.

- A test that checks that the function raises a TypeError when the input is not a string.

- A test that checks that the function raises a ValueError when the input is an empty string.

Are there any other edge cases you can think of? Write a test to prove that your edge case is not handled by this function as it is currently written.

Remember that to use pytest, you need to create a file that starts

with test_ and that the test functions need to start with

test_ as well.

What happens if the phrase contains only articles? For example, “and the or by”?

In the tests directory, create a file called test_text_utilities.py:

PYTHON

import pytest

from textanalysis_tool.text_utilities import create_acronym


def test_create_acronym():
    assert create_acronym("As Soon As Possible") == "ASAP"
    assert create_acronym("For Your Information") == "FYI"


def test_create_acronym_invalid_type():
    with pytest.raises(TypeError):
        create_acronym(123)


def test_create_acronym_empty_string():
    with pytest.raises(ValueError):
        create_acronym("")


def test_create_acronym_no_valid_words():
    with pytest.raises(ValueError):
        create_acronym("and the or")

Run the tests with uv run pytest.

In the create_acronym function, we need to add a check

after we finish iterating through the words to see if the acronym is

empty. If it is, we can raise a ValueError:
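A version of the function with that extra check could look like the sketch below. Only the check after the loop is new; the wording of its error message is an assumption, and the docstring is shortened.

```python
def create_acronym(phrase: str) -> str:
    """Create an acronym from a phrase, skipping common short words."""
    if not isinstance(phrase, str):
        raise TypeError("Phrase must be a string.")

    words = phrase.split()
    if len(words) == 0:
        raise ValueError("Phrase must contain at least one word.")

    articles = {"a", "an", "the", "and", "but", "or", "nor", "on", "at", "to", "by", "in"}
    acronym = ""
    for word in words:
        if word.lower() not in articles:
            acronym += word[0].upper()

    # New check: every word was skipped, so there is nothing to return.
    # The message text is an assumption, not taken from the lesson.
    if not acronym:
        raise ValueError("Phrase must contain at least one word that is not skipped.")

    return acronym


print(create_acronym("As Soon As Possible"))
```

With this change, a phrase such as “and the or” raises a ValueError instead of silently returning an empty string.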

Challenge 2: Additional Edge Case

Try adding a test for another edge case for our Document class, this

time for a file that is not actually a text file, for example, a binary

file or an image file. Then, update the Document class to

make the test pass.

You can mock a binary file by using the mock_open

function from the unittest.mock module, and using the

read_data parameter to provide binary data like

b'\x00\x01\x02'.

In the Document class, we need to check whether the data read from the file is binary data. Our mock returns the binary data as a bytes object, so we can check whether the value returned by the read method is an instance of type bytes. If it is, we can raise a ValueError. (A real file opened in text mode behaves differently: read returns a str, or raises a UnicodeDecodeError if the bytes are not valid UTF-8.)
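To also cover real files on disk, one option is to catch the decoding error and convert it into a ValueError. The sketch below is an illustration under assumptions rather than part of the lesson's solution: read_text_file is a hypothetical helper that mirrors Document.read, and the byte sequence is chosen only because it is not valid UTF-8.

```python
import tempfile
from pathlib import Path


def read_text_file(filepath: str) -> str:
    # Hypothetical helper mirroring Document.read, with an extra decoding check
    try:
        with open(filepath, "r", encoding="utf-8") as file:
            return file.read()
    except UnicodeDecodeError as error:
        raise ValueError(f"File {filepath} is not a valid text file.") from error


with tempfile.TemporaryDirectory() as tmp_dir:
    binary_path = Path(tmp_dir) / "binary_file.bin"
    binary_path.write_bytes(b"\xff\xfe\x00\x01")  # 0xff can never start a UTF-8 sequence

    try:
        read_text_file(str(binary_path))
        outcome = "no error"
    except ValueError:
        outcome = "ValueError"

print(outcome)
```

Callers then only need to handle ValueError, whether the problem is an empty file, a missing Gutenberg marker, or undecodable bytes.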

You can create a test that simulates opening a binary file by using

the mock_open function from the unittest.mock

module. Here’s an example of how you might write such a test:

PYTHON

def test_binary_file(monkeypatch):
    # Mock a binary file
    mock = mock_open(read_data=b'\x00\x01\x02')
    monkeypatch.setattr("builtins.open", mock)

    with pytest.raises(ValueError):
        Document(filepath="binary_file.bin")

And then, in the Document class, you can check if the data read from the file is binary data like this:

PYTHON

...
    def get_content(self, filepath: str) -> str:
        raw_text = self.read(filepath)

        if not raw_text:
            raise ValueError(f"File {filepath} contains no content.")

        if isinstance(raw_text, bytes):
            raise ValueError(f"File {self.filepath} is not a valid text file.")

        match = re.search(self.CONTENT_PATTERN, raw_text, re.DOTALL)
        if match:
            return match.group(1).strip()
        raise ValueError(f"File {filepath} is not a valid Project Gutenberg Text file.")
...

- We can use pytest to write and run unit tests in Python.

- A good test is isolated, repeatable, fast, and clear.

- We can use fixtures to provide data or state to our tests.

- We can use monkey patching to modify the behavior of functions or classes during testing.

- Test Driven Development (TDD) is a practice where we write tests before writing the code to make the tests pass.

Content from Extending Classes with Inheritance

Last updated on 2025-09-28

Overview

Questions

- What if we want classes that are similar, but handle slightly different cases?

- How can we avoid duplicating code in our classes?

Objectives

- Explain the concept of inheritance in object-oriented programming

- Demonstrate how to create a subclass that inherits from a parent class

- Show how to override methods and properties in a subclass

Extending Classes with Inheritance

So far you may be wondering why classes are useful. After all, all we’ve really done in essence is make a tiny module with some functions in it that are slightly more complicated than normal functions. One of the real powers of classes is the ability to limit code duplication through a concept called inheritance.

Inheritance

Inheritance is a way to create a new class that contains all of the same properties and methods as an existing class, but allows us to add additional new properties and methods, or to override existing methods. This allows us to create a new class that is a specialized version of an existing class, without having to rewrite a whole bunch of code.

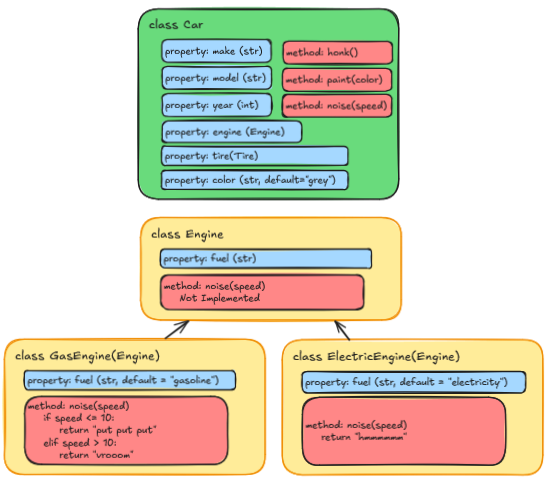

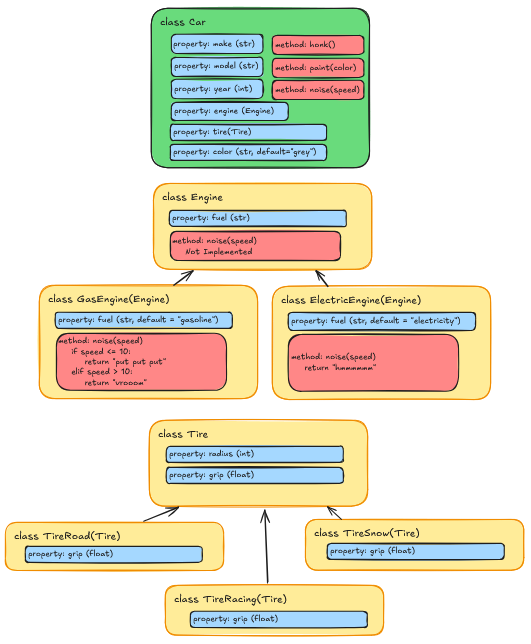

Taking a look at our Car class from earlier, we might want to create

a new class for a specific type of engine, like a Gas Engine or an

Electric Engine. Both kinds of cars will have the same basic properties

and methods, but they will also have some additional properties and

methods that are specific to the type of engine, or properties that are

set by default, like our fuel property.

But since both types of cars are still cars, they will share a lot of the same properties and methods. Rather than repeating all of the code from the Car class in both our new classes, we can use inheritance to create our new classes based on the Car class:

In python, this would look something like this:

PYTHON

class Car:
    def __init__(self, make: str, model: str, year: int, color: str = "grey", fuel: str = "gasoline"):
        self.make = make
        self.model = model
        self.year = year
        self.color = color
        self.fuel = fuel

    def honk(self) -> str:
        return "beep"

    def paint(self, new_color: str) -> None:
        self.color = new_color

    def noise(self, speed: int) -> str:
        if speed <= 10:
            return "putt putt"
        else:
            return "vrooom"


class CarGasEngine(Car):
    def __init__(self, make: str, model: str, year: int, color: str = "grey"):
        super().__init__(make=make, model=model, year=year, color=color, fuel="gasoline")


class CarElectricEngine(Car):
    def __init__(self, make: str, model: str, year: int, color: str = "grey"):
        super().__init__(make=make, model=model, year=year, color=color, fuel="electric")

    def noise(self, speed: int) -> str:
        return "hmmmmmm"

Note that the noise method in the

CarElectricEngine class is overridden to provide a

different implementation than the one in the Car class.

This is called method overriding, and it allows us to define a different

behavior for a method in a subclass. When we call the noise

method on an instance of CarElectricEngine, it will use the

overridden method, rather than the one defined in the Car

class.

However in CarGasEngine, we do not override the

noise method, so it will use the one defined in the

Car class.

More on overriding methods in a moment.

You can see that the CarGasEngine class is defined in a similar way

to the Car class, but it inherits from the Car class by including it in

parentheses after the class name. The __init__ method of

the CarGasEngine class also has a call to

super().__init__(). The super() function is a

way to refer specifically to the parent class, in this case, the Car

class. This allows us to call the __init__ method of the

Car class, which sets up all of the properties that a Car has.
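A short usage sketch may help make this concrete. The classes below are trimmed-down copies of the ones above (year and color are left out to keep the example small), and the make and model values are placeholders.

```python
class Car:
    def __init__(self, make: str, model: str, fuel: str = "gasoline"):
        self.make = make
        self.model = model
        self.fuel = fuel

    def honk(self) -> str:
        return "beep"

    def noise(self, speed: int) -> str:
        return "putt putt" if speed <= 10 else "vrooom"


class CarGasEngine(Car):
    def __init__(self, make: str, model: str):
        super().__init__(make=make, model=model, fuel="gasoline")


class CarElectricEngine(Car):
    def __init__(self, make: str, model: str):
        super().__init__(make=make, model=model, fuel="electric")

    def noise(self, speed: int) -> str:
        return "hmmmmmm"


gas_car = CarGasEngine(make="ExampleMake", model="ExampleModel")
electric_car = CarElectricEngine(make="ExampleMake", model="ExampleModel")

print(gas_car.fuel, gas_car.noise(5))            # noise is inherited from Car
print(electric_car.fuel, electric_car.noise(5))  # noise is overridden
print(electric_car.honk())                       # honk is inherited unchanged
print(isinstance(electric_car, Car))             # a subclass instance is also a Car
```

Neither subclass defines honk, yet both can call it, because Python looks the method up on the parent class when the subclass does not provide one.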

Applying Inheritance to Our Document Class

For our Document class, we have a few different types of

documents available from the Project Gutenberg website. We are currently

using plain text files, but there are also HTML files that we can

download. They will have the same information, but the data within will

be structured in a slightly different way. We can use inheritance to

create a pair of new classes: HTMLDocument and

PlainTextDocument, that both inherit from the

Document class. This will allow us to keep all of the

common functionality in the Document class, but to add any

additional functionality specific to each document type.

Most of what we’ve written so far is specific to reading and parsing data out of the plain text files, so almost all of the code from Document can be moved into PlainTextDocument. We’ll leave gutenberg_url, get_line_count, and get_word_occurrence in Document.

In addition, we’ll need an __init__ in our Document class. At the moment, all it does is save the file path in the filepath property, but we might expand this in the future. We’ll also need a call to super().__init__() in our PlainTextDocument.

At the moment, our classes look like this:

PYTHON

class Document:
    @property
    def gutenberg_url(self) -> str | None:
        if self.id:
            return f"https://www.gutenberg.org/cache/epub/{self.id}/pg{self.id}.txt"
        return None

    def __init__(self, filepath: str):
        self.filepath = filepath

    def get_line_count(self) -> int:
        return len(self._content.splitlines())

    def get_word_occurrence(self, word: str) -> int:
        return self._content.lower().count(word.lower())

PYTHON

import re

from textanalysis_tool.document import Document


class PlainTextDocument(Document):
    TITLE_PATTERN = r"^Title:\s*(.*?)\s*$"
    AUTHOR_PATTERN = r"^Author:\s*(.*?)\s*$"
    ID_PATTERN = r"^Release date:\s*.*?\[eBook #(\d+)\]"
    CONTENT_PATTERN = r"\*\*\* START OF THE PROJECT GUTENBERG EBOOK .*? \*\*\*(.*?)\*\*\* END OF THE PROJECT GUTENBERG EBOOK .*? \*\*\*"

    def __init__(self, filepath: str):
        super().__init__(filepath=filepath)

    def _extract_metadata_element(self, pattern: str, text: str) -> str | None:
        match = re.search(pattern, text, re.MULTILINE)
        return match.group(1).strip() if match else None

    def get_content(self, filepath: str) -> str:
        raw_text = self.read(filepath)
        match = re.search(self.CONTENT_PATTERN, raw_text, re.DOTALL)
        if match:
            return match.group(1).strip()
        raise ValueError(f"File {filepath} is not a valid Project Gutenberg Text file.")

    def get_metadata(self, filepath: str) -> dict:
        raw_text = self.read(filepath)

        title = self._extract_metadata_element(self.TITLE_PATTERN, raw_text)
        author = self._extract_metadata_element(self.AUTHOR_PATTERN, raw_text)
        extracted_id = self._extract_metadata_element(self.ID_PATTERN, raw_text)

        return {
            "title": title,
            "author": author,
            "id": int(extracted_id) if extracted_id else None,
        }

    def read(self, file_path: str) -> str:
        with open(file_path, "r", encoding="utf-8") as file:
            raw_text = file.read()

        if not raw_text:
            raise ValueError(f"File {self.filepath} contains no content.")

        if isinstance(raw_text, bytes):
            raise ValueError(f"File {self.filepath} is not a valid text file.")

        return raw_text

We’ll also have another class for reading HTML files. This will be similar to the PlainTextDocument class, but it will use the BeautifulSoup library to parse the HTML file and extract the content and metadata. Rather than type out the entire class now, you can either copy and paste the code below into a new file called src/textanalysis_tool/html_document.py, or you can download the file from the Workshop Resources.

As we do not have BeautifulSoup in our environment yet, you will need to add it using uv:

uv add beautifulsoup4

This will install the package to your environment as well as add it to your pyproject.toml file.

PYTHON

import re

from bs4 import BeautifulSoup

from textanalysis_tool.document import Document


class HTMLDocument(Document):
    URL_PATTERN = "^https://www.gutenberg.org/files/([0-9]+)/.*"

    @property
    def gutenberg_url(self) -> str | None:
        if self.id:
            return f"https://www.gutenberg.org/cache/epub/{self.id}/pg{self.id}-h.zip"
        return None

    def __init__(self, filepath: str):
        super().__init__(filepath=filepath)

        # Derive the ID from the source URL in the metadata; None if the URL does not match
        url = self.get_metadata(filepath).get("url", "")
        extracted_id = re.search(self.URL_PATTERN, url, re.DOTALL)
        self.id = int(extracted_id.group(1)) if extracted_id else None

    def read(self, filepath) -> BeautifulSoup:
        with open(filepath, encoding="utf-8") as file_obj:
            parsed_file = BeautifulSoup(file_obj, "html.parser")

        # Check that the file is parsable as HTML
        if not parsed_file or not parsed_file.find("h1"):
            raise ValueError("The file could not be parsed as HTML.")

        return parsed_file

    def get_content(self, filepath: str) -> str:
        parsed_file = self.read(filepath)

        # Find the first h1 tag (The book title)
        title_h1 = parsed_file.find("h1")

        # Collect all the content after the first h1
        content = []
        for element in title_h1.find_next_siblings():
            text = element.get_text(strip=True)

            # Stop early if we hit this text, which indicates the end of the book
            if "END OF THE PROJECT GUTENBERG EBOOK" in text:
                break

            if text:
                content.append(text)

        return "\n\n".join(content)

    def get_metadata(self, filename) -> dict:
        parsed_file = self.read(filename)

        title = parsed_file.find("meta", {"name": "dc.title"})["content"]
        author = parsed_file.find("meta", {"name": "dc.creator"})["content"]
        url = parsed_file.find("meta", {"name": "dcterms.source"})["content"]
        extracted_id = re.search(self.URL_PATTERN, url, re.DOTALL)
        id = int(extracted_id.group(1)) if extracted_id else None

        return {"title": title, "author": author, "id": id, "url": url}

Overriding Methods

Notice that in the HTMLDocument class, we have

overridden the gutenberg_url property to return the URL for

the HTML version of the book. This is an example of how we can override

methods and properties in a subclass to provide specialized behavior.

When we create an instance of HTMLDocument, it will use the

gutenberg_url property defined in the

HTMLDocument class, rather than the one defined in the

Document class.

When overriding methods, it’s important to ensure that the new method has the same signature as the method being overridden. This means that the new method should have the same name, number of parameters, and return type as the method being overridden.

Additionally, the __init__ is technically also an

overridden method, since it is defined in the parent class. However,

since we are calling the parent class’s __init__ method

using super(), we are not completely replacing the behavior

of the parent class’s __init__ method, but rather extending

it. We can do the exact same thing with other methods if we want to add

some functionality to an existing method, rather than completely

replacing it.
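As a small illustration of extending rather than replacing a method, the sketch below overrides honk but still calls the parent's version through super(). LoudCar is a hypothetical subclass invented for this example.

```python
class Car:
    def honk(self) -> str:
        return "beep"


class LoudCar(Car):  # hypothetical subclass, for illustration only
    def honk(self) -> str:
        # Reuse the parent's behaviour, then add to it
        return super().honk() + " BEEP"


print(Car().honk())
print(LoudCar().honk())
```

If the honk method in Car changes later, LoudCar picks up the change automatically, because it builds on the parent's result instead of copying it.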

Testing our Inherited Classes

Now let’s try out our classes. We already have the pg2680.txt file in our scratch folder, now let’s download the HTML version of the same book from Project Gutenberg. You can download it from this link. (Note that the file is zipped, as it also contains images. We won’t be using the images, but you’ll need to unzip the file to get to the HTML file.) Once you have the HTML file, place it in the scratch folder alongside the pg2680.txt file.

You can either copy and paste the code below into a new file called

demo_inheritance.py, or you can download the file from the

Workshop Resources.

PYTHON

import sys

sys.path.insert(0, "src")

from textanalysis_tool.document import Document

from textanalysis_tool.plain_text_document import PlainTextDocument

from textanalysis_tool.html_document import HTMLDocument

# Test the PlainTextDocument class

plain_text_doc = PlainTextDocument(filepath="scratch/pg2680.txt")

print(f"Plain Text Document Title: {plain_text_doc.title}")

print(f"Plain Text Document Author: {plain_text_doc.author}")

print(f"Plain Text Document ID: {plain_text_doc.id}")

print(f"Plain Text Document Line Count: {plain_text_doc.line_count}")

print(f"Plain Text Document 'the' Occurrences: {plain_text_doc.get_word_occurrence('the')}")

print(f"Plain Text Document Gutenberg URL: {plain_text_doc.gutenberg_url}")

print(f"Type of Plain Text Document: {type(plain_text_doc)}")

print(f"Parent Class: {type(plain_text_doc).__bases__[0]}")

print("=" * 40)

# Test the HTMLDocument class

html_doc = HTMLDocument(filepath="scratch/pg2680-images.html")

print(f"HTML Document Title: {html_doc.title}")

print(f"HTML Document Author: {html_doc.author}")

print(f"HTML Document ID: {html_doc.id}")

print(f"HTML Document Line Count: {html_doc.line_count}")

print(f"HTML Document 'the' Occurrences: {html_doc.get_word_occurrence('the')}")

print(f"HTML Document Gutenberg URL: {html_doc.gutenberg_url}")

print(f"Type of HTML Document: {type(html_doc)}")

print(f"Parent Class: {type(html_doc).__bases__[0]}")

print("=" * 40)

# We can't use the Document class directly

doc = Document(filepath="scratch/pg2680.txt")

You should get some output that looks like this:

Plain Text Document Title: Meditations

Plain Text Document Author: Emperor of Rome Marcus Aurelius

Plain Text Document ID: 2680

Plain Text Document Line Count: 6845

Plain Text Document 'the' Occurrences: 5736

Plain Text Document Gutenberg URL: https://www.gutenberg.org/cache/epub/2680/pg2680.txt

Type of Plain Text Document: <class 'textanalysis_tool.plain_text_document.PlainTextDocument'>

Parent Class: <class 'textanalysis_tool.document.Document'>

========================================

HTML Document Title: Meditations

HTML Document Author: Marcus Aurelius, Emperor of Rome, 121-180

HTML Document ID: 2680

HTML Document Line Count: 5635

HTML Document 'the' Occurrences: 6161

HTML Document Gutenberg URL: https://www.gutenberg.org/cache/epub/2680/pg2680-h.zip

Type of HTML Document: <class 'textanalysis_tool.html_document.HTMLDocument'>

Parent Class: <class 'textanalysis_tool.document.Document'>

========================================

Traceback (most recent call last):
  File "E:\Projects\Python\scratch\textanalysis-tool\scratch\demo_inheritance.py", line 34, in <module>
    doc = Document(filepath="scratch/pg2680.txt")
          ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "E:\Projects\Python\scratch\textanalysis-tool\src\textanalysis_tool\document.py", line 14, in __init__
    self.content = self.get_content(filepath)
                   ^^^^^^^^^^^^^^^^
AttributeError: 'Document' object has no attribute 'get_content'

Note that the end of the script results in an error. Since the Document class no longer contains the get_content or get_metadata methods, it cannot be used directly. However, we don’t get an error until we try to call one of those methods.

This is a use case for something called an abstract base class, which

is a class that is designed to be inherited from, but never instantiated

directly. One way to handle this would be to add these methods to the

Document class, but have them raise a

NotImplementedError. This way, if someone tries to

instantiate the Document class directly, they will get an

error indicating that maybe this class is not meant to be used

directly:

PYTHON

class Document:
    @property
    def gutenberg_url(self) -> str | None:
        if self.id:
            return f"https://www.gutenberg.org/cache/epub/{self.id}/pg{self.id}.txt"
        return None

    @property
    def line_count(self) -> int:
        return len(self.content.splitlines())

    def __init__(self, filepath: str):
        self.filepath = filepath
        self.content = self.get_content(filepath)

        metadata = self.get_metadata(filepath)
        self.title = metadata.get("title")
        self.author = metadata.get("author")
        self.id = metadata.get("id")

    def get_word_occurrence(self, word: str) -> int:
        return self.content.lower().count(word.lower())

    def get_content(self, filepath: str) -> str:
        raise NotImplementedError("This method should be implemented by subclasses.")

    def get_metadata(self, filepath: str) -> dict[str, str | None]:
        raise NotImplementedError("This method should be implemented by subclasses.")

Another way to handle this is to use the abc module from the standard library, which provides a way to define abstract base classes. This is a more formal way to define a class that is meant to be inherited from, but not instantiated directly:

PYTHON

from abc import ABC, abstractmethod


class Document(ABC):
    @property
    def gutenberg_url(self) -> str | None:
        if self.id:
            return f"https://www.gutenberg.org/cache/epub/{self.id}/pg{self.id}.txt"
        return None

    @property
    def line_count(self) -> int:
        return len(self.content.splitlines())

    def __init__(self, filepath: str):
        self.filepath = filepath
        self.content = self.get_content(filepath)

        self.metadata = self.get_metadata(filepath)
        self.title = self.metadata.get("title")
        self.author = self.metadata.get("author")
        self.id = self.metadata.get("id")

    def get_word_occurrence(self, word: str) -> int:
        return self.content.lower().count(word.lower())

    @abstractmethod
    def get_content(self, filepath: str) -> str:
        pass

    @abstractmethod
    def get_metadata(self, filepath: str) -> dict[str, str | None]:
        pass

:::
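To see what the `abc` approach changes in practice, here is a minimal, self-contained sketch. The names `Shape` and `Square` are hypothetical stand-ins and are not part of `textanalysis_tool`. With `ABC` and `@abstractmethod`, Python refuses to create the instance at all and raises a `TypeError`, rather than waiting until a missing method is called:

```python
from abc import ABC, abstractmethod


class Shape(ABC):
    """Hypothetical abstract base class, used only for illustration."""

    def __init__(self, side: float):
        self.side = side

    @abstractmethod
    def area(self) -> float:
        """Subclasses must provide their own implementation."""

    def describe(self) -> str:
        # Shared behaviour that relies on the subclass's area() method
        return f"{type(self).__name__} with area {self.area()}"


class Square(Shape):
    def area(self) -> float:
        return self.side**2


# A concrete subclass can be instantiated as usual
print(Square(side=2).describe())

# The abstract class itself cannot be instantiated
try:
    Shape(side=2)
except TypeError as err:
    print(f"TypeError: {err}")
```

The exact wording of the `TypeError` message differs between Python versions, but it names the abstract methods that still need to be implemented.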

## Unit Testing

One of the first effects of this is that our `Document` class is no longer directly testable, since

it cannot be instantiated directly. However, we can still test the `PlainTextDocument` and

`HTMLDocument` classes, which will also indirectly test the `Document` class. You can either copy

the code below into two new files called `tests/test_plain_text_document.py` and

`tests/test_html_document.py`, or you can download the files from the [Workshop Resources](./workshop_resources.html).

(Also make sure to delete the existing `tests/test_document.py` file, since it is no longer

applicable.)
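If you would still like to exercise the shared logic of the base class in isolation, one option is to define a tiny concrete subclass inside a test module. This is only a sketch and not something the workshop files do. To keep the example self-contained it uses a simplified stand-in called `BaseDocument` instead of the real `Document` class, and `StubDocument` with its canned text is hypothetical:

```python
from abc import ABC, abstractmethod


class BaseDocument(ABC):
    """Simplified stand-in for the workshop's Document class."""

    def __init__(self, filepath: str):
        self.filepath = filepath
        self.content = self.get_content(filepath)

    @property
    def line_count(self) -> int:
        return len(self.content.splitlines())

    def get_word_occurrence(self, word: str) -> int:
        return self.content.lower().count(word.lower())

    @abstractmethod
    def get_content(self, filepath: str) -> str:
        pass


class StubDocument(BaseDocument):
    """Hypothetical test double: returns canned text instead of reading a file."""

    def get_content(self, filepath: str) -> str:
        return "This is a test document.\nIt is only a test document."


def test_line_count():
    assert StubDocument(filepath="unused.txt").line_count == 2


def test_word_occurrence():
    assert StubDocument(filepath="unused.txt").get_word_occurrence("test") == 2
```

Because the stub never opens a file, these tests do not need to mock `open`.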

`tests/test_plain_text_document.py`

::: spoiler

```python
import pytest
from unittest.mock import mock_open

from textanalysis_tool.plain_text_document import PlainTextDocument

TEST_DATA = """
Title: Test Document
Author: Test Author
Release date: January 1, 2001 [eBook #1234]
Most recently updated: February 2, 2002
*** START OF THE PROJECT GUTENBERG EBOOK TEST ***
This is a test document. It contains words.
It is only a test document.
*** END OF THE PROJECT GUTENBERG EBOOK TEST ***
"""


@pytest.fixture(autouse=True)
def mock_file(monkeypatch):
    mock = mock_open(read_data=TEST_DATA)
    monkeypatch.setattr("builtins.open", mock)
    return mock


@pytest.fixture
def doc():
    return PlainTextDocument(filepath="tests/example_file.txt")


def test_create_document(doc):
    assert doc.title == "Test Document"
    assert doc.author == "Test Author"
    assert isinstance(doc.id, int) and doc.id == 1234


def test_empty_file(monkeypatch):
    # Mock an empty file
    mock = mock_open(read_data="")
    monkeypatch.setattr("builtins.open", mock)

    with pytest.raises(ValueError):
        PlainTextDocument(filepath="empty_file.txt")


def test_binary_file(monkeypatch):
    # Mock a binary file
    mock = mock_open(read_data=b"\x00\x01\x02")
    monkeypatch.setattr("builtins.open", mock)

    with pytest.raises(ValueError):
        PlainTextDocument(filepath="binary_file.bin")


def test_document_line_count(doc):
    assert doc.line_count == 2


def test_document_word_occurrence(doc):
    assert doc.get_word_occurrence("test") == 2
```

:::

tests/test_html_document.py

PYTHON

import pytest
from unittest.mock import mock_open

from textanalysis_tool.html_document import HTMLDocument

TEST_DATA = """
<head>
<meta name="dc.title" content="Test Document">
<meta name="dcterms.source" content="https://www.gutenberg.org/files/1234/1234-h/1234-h.htm">
<meta name="dc.creator" content="Test Author">
</head>
<body>
<h1>Test Document</h1>
<p>
This is a test document. It contains words.
It is only a test document.
</p>
</body>
"""


@pytest.fixture(autouse=True)
def mock_file(monkeypatch):
    mock = mock_open(read_data=TEST_DATA)
    monkeypatch.setattr("builtins.open", mock)
    return mock


@pytest.fixture
def doc():
    return HTMLDocument(filepath="tests/example_file.txt")


def test_create_document(doc):
    assert doc.title == "Test Document"
    assert doc.author == "Test Author"
    assert isinstance(doc.id, int) and doc.id == 1234


def test_empty_file(monkeypatch):
    # Mock an empty file
    mock = mock_open(read_data="")
    monkeypatch.setattr("builtins.open", mock)

    with pytest.raises(ValueError):
        HTMLDocument(filepath="empty_file.html")


def test_document_line_count(doc):
    assert doc.line_count == 2


def test_document_word_occurrence(doc):
    assert doc.get_word_occurrence("test") == 2

Challenge 1: Predict the output

What will happen when we run the following code? Why?

PYTHON

class Animal:
    def __init__(self, name: str):
        print(f"Creating an animal named {name}")
        self.name = name

    def whoami(self) -> str:
        return f"I am a {type(self)} named {self.name}"

class Dog(Animal):
    def __init__(self, name: str):
        print(f"Creating a dog named {name}")
        super().__init__(name=name)

class Cat(Animal):
    def __init__(self, name: str):
        print(f"Creating a cat named {name}")


animals = [Dog(name="Chance"), Cat(name="Sassy"), Dog(name="Shadow")]

for animal in animals:
    print(animal.whoami())

We get some of the output we expect, but we also get an error:

Creating a dog named Chance

Creating an animal named Chance

Creating a cat named Sassy

Creating a dog named Shadow

Creating an animal named Shadow

I am a <class '__main__.Dog'> named Chance

---------------------------------------------------------------------------
AttributeError                            Traceback (most recent call last)
Cell In[4], line 22
     19 animals = [Dog(name="Chance"), Cat(name="Sassy"), Dog(name="Shadow")]
     21 for animal in animals:
---> 22     print(animal.whoami())

Cell In[4], line 7, in Animal.whoami(self)
      6 def whoami(self) -> str:
----> 7     return f"I am a {type(self)} named {self.name}"

AttributeError: 'Cat' object has no attribute 'name'

We failed to call the super().__init__() method in the Cat class, so the name property was never set. When we then try to access the instance property name in the whoami method, we get an AttributeError.
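A possible fix, sketched below, is to have Cat delegate to its parent in the same way Dog does. The classes are trimmed down so the example stands on its own, and whoami uses type(self).__name__ (a small change from the challenge code) to keep the printed text short:

```python
class Animal:
    def __init__(self, name: str):
        self.name = name

    def whoami(self) -> str:
        return f"I am a {type(self).__name__} named {self.name}"


class Cat(Animal):
    def __init__(self, name: str):
        print(f"Creating a cat named {name}")
        # Delegate to the parent class so that self.name gets set
        super().__init__(name=name)


print(Cat(name="Sassy").whoami())
```

With the call to super().__init__() in place, whoami can read self.name on a Cat instance without raising an AttributeError.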

Challenge 2: Class Methods and Properties

We’ve mostly focused on instance properties and methods so far, but classes can also have what are called “class properties” and “class methods”. These are properties and methods that are associated with the class itself, rather than with an instance of the class.

Without running it, what do you think the following code will do? Will it run without error?

PYTHON

class Animal:

PHYLUM = "Chordata"

def __init__(self, name: str):

self.name = name

def whoami(self) -> str: